
Salesforce's take on how to make AI more energy efficient

The software company has its eye on open source models and modular algorithms.

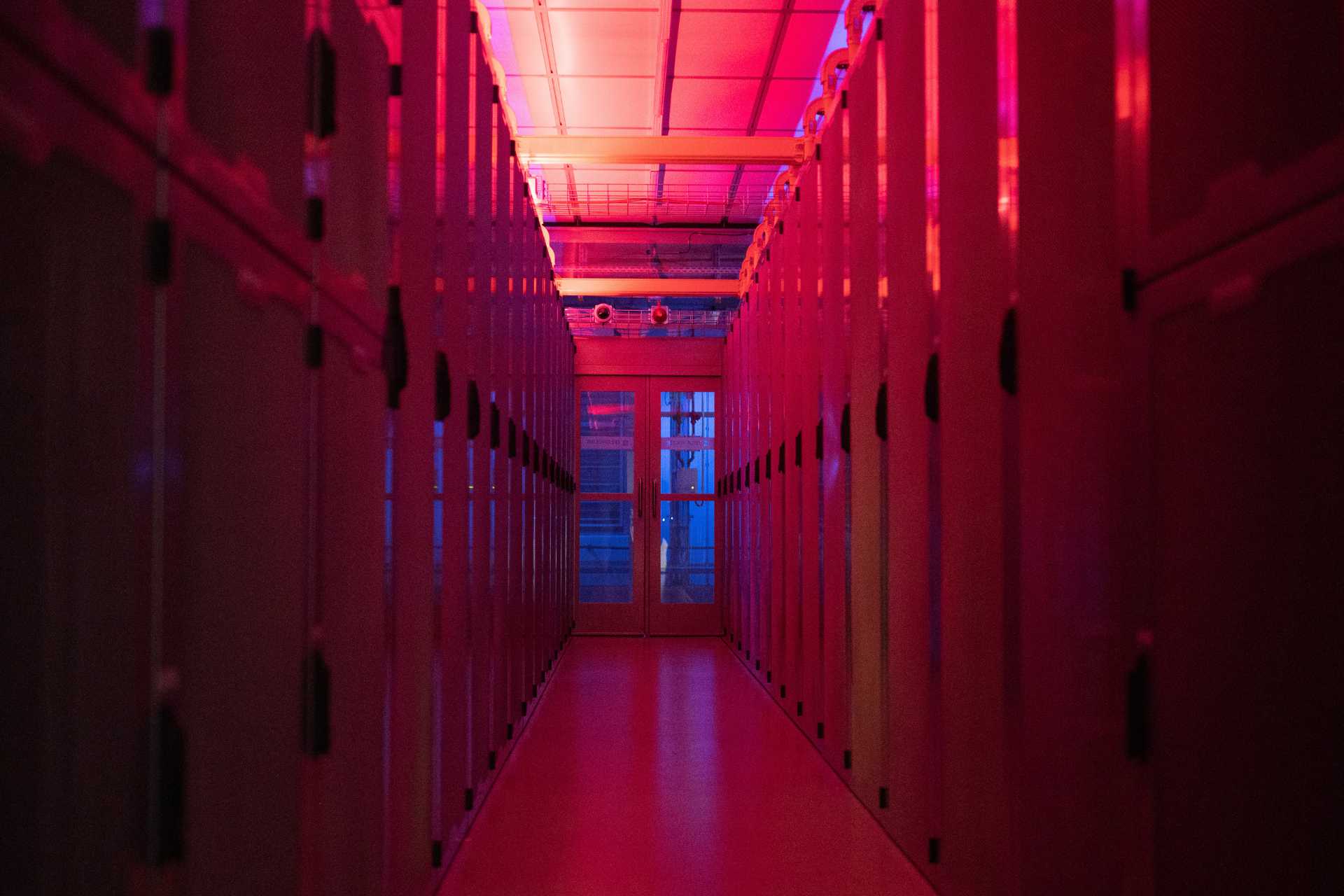

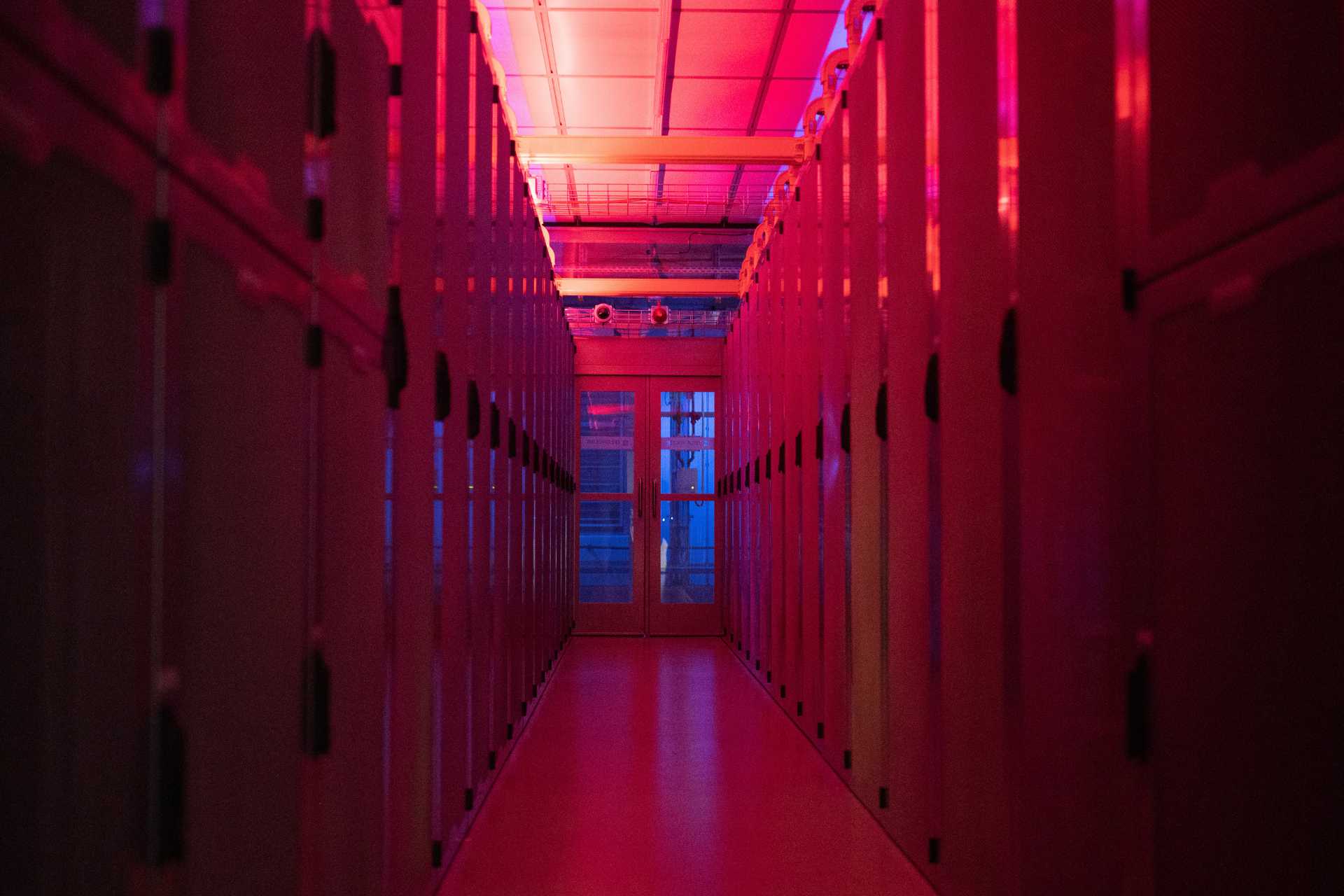

Photo credit: Jana Glose / picture alliance via Getty Images


In many ways, Salesforce has followed the now-classic trajectory of a Silicon Valley software company: founded in 1999 in a one-bedroom apartment, launched at an event featuring a performance by the B-52s, taken public via IPO in 2004, and now the host of a buzzy event that draws hundreds of thousands of attendees to downtown San Francisco each year.

And while its generative AI inroads via its CRM platform haven’t grabbed headlines the way other Big Tech AI moves have, Salesforce has followed a trajectory similar to its peers’ there, too. The company launched “the world’s first generative AI for CRM” in 2023, and is now spotlighting solutions aimed at mitigating the ballooning energy needs of data centers.

Salesforce doesn’t own its own data centers, which means its approach to mitigating the energy use of its software looks a little different from that of Google, for example, which has experimented with demand response pilots at certain data centers and in some cases acts as a direct offtaker for renewable energy.

Salesforce uses a mix of colocated data centers and is increasingly moving its processing to the public cloud, procuring compute rather than a specific spot in a specific data center. And while it has tapped into data center trends like using more efficient hardware (such as Tensor Processing Units) and prioritizing space in data centers that draw from low-carbon grids, it has also prioritized building open source models, which the company says will ultimately play a key role in reducing the energy intensity of training AI models.

Better, not bigger

Boris Gamazaychikov, a senior manager on Salesforce’s emissions reduction team, said the tech industry should focus on optimizing its models and moving away from ever-larger ones with sky-high energy needs. In recent years, he said, the trend has been to add more and more data to models, to the point where some of the largest models to date are essentially trained on the entire internet and have trillions of parameters.

But that’s starting to change, he added, with many companies, including Salesforce, now focusing on domain-specific models built for a specific purpose.

“Folks are realizing we don’t need one model to do everything,” Gamazaychikov told Latitude Media. “There’s a new trend emerging around orchestration — having a number of smaller models with specific purposes tied together, with a model on top that’s helping to route amongst the different models.”

Essentially, a handful of “smart” models that are smaller and more modular can be used more efficiently than one giant, inefficient model parsing through the whole internet.
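The orchestration pattern Gamazaychikov describes can be illustrated with a toy sketch. This is not Salesforce’s implementation: the specialist names (`sales`, `support`, `general`), their keyword sets, and the keyword-overlap router are all hypothetical stand-ins. In a real system the routing layer would itself be a model, and each specialist would be a smaller, domain-specific model rather than a stub function.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Specialist:
    """A hypothetical small, domain-specific model, stubbed as a plain function."""
    name: str
    keywords: frozenset
    handle: Callable[[str], str]


def route(query: str, specialists: list, fallback: Specialist) -> Specialist:
    """Pick the specialist whose keywords overlap most with the query.

    A stand-in for the routing model "on top": ties go to the earlier
    specialist in the list, and a query with no overlap goes to the fallback.
    """
    words = set(re.findall(r"[a-z]+", query.lower()))
    best, best_score = fallback, 0
    for s in specialists:
        score = len(words & s.keywords)
        if score > best_score:
            best, best_score = s, score
    return best


def answer(query: str, specialists: list, fallback: Specialist) -> str:
    """Route the query, then run only the chosen specialist."""
    chosen = route(query, specialists, fallback)
    return f"[{chosen.name}] {chosen.handle(query)}"


# Hypothetical specialists; each stub just reports how many words it received.
SPECIALISTS = [
    Specialist("sales", frozenset({"lead", "opportunity", "pipeline", "quote"}),
               lambda q: f"sales model handled {len(q.split())} words"),
    Specialist("support", frozenset({"ticket", "case", "refund", "outage"}),
               lambda q: f"support model handled {len(q.split())} words"),
]
GENERAL = Specialist("general", frozenset(),
                     lambda q: f"general model handled {len(q.split())} words")

if __name__ == "__main__":
    print(answer("Summarize this support ticket about a refund", SPECIALISTS, GENERAL))
```

In this sketch only the chosen specialist runs for each query, which mirrors the efficiency argument in the article: a small, purpose-built model handles the request instead of one giant model being invoked for everything.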

That’s really the primary tactic the industry should be thinking about when it comes to managing energy use, Gamazaychikov said, because the difference in energy and water use between the largest and smallest models is huge.

Reusing and reducing

Once models are optimized, it’s time to think about how they’re trained and deployed, Gamazaychikov said. And one key way to drive down a model’s energy demand? Do less training. That’s where open source AI should come in, he added.

“Being able to transparently share these models so that we can collaborate together rather than having to start from scratch every time has huge sustainability benefits,” Gamazaychikov said. That’s a trend he says his team is already starting to see: fine-tuning existing, already-trained models as opposed to building new ones.

To illustrate the potential scale of open source’s contributions, he pointed to Llama 2, the second iteration of Meta’s popular open source large language model, which was released last summer. Since its release, 13,000 new models have been created using Llama 2 as a base, Gamazaychikov said. Training all 13,000 of those models from scratch would have required a massive amount of energy, he added: by Salesforce’s internal calculations, it would have produced more than 5 million tons of carbon dioxide, equivalent to the energy use of 700,000 homes for a year.
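As a rough back-of-the-envelope breakdown of the figures Gamazaychikov cited, treating “more than 5 million tons” as exactly 5 million (so both results are approximate, and the per-model number is only an average across models of very different sizes):

$$
\frac{5{,}000{,}000\ \text{t CO}_2}{13{,}000\ \text{models}} \approx 385\ \text{t CO}_2\ \text{per model},
\qquad
\frac{5{,}000{,}000\ \text{t CO}_2}{700{,}000\ \text{homes}} \approx 7.1\ \text{t CO}_2\ \text{per home per year}.
$$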

Despite the burgeoning open source space, there’s a lot more to be done to leverage its potential benefits: “We really need bigger players to lead with transparency and collaboration,” Gamazaychikov said, and it’s critical for that movement to start now.

“Sure, in the long-term we may see tremendous benefits from AI around climate,” he said. “But in the short term, if we have to stop taking coal plants offline or bring back gas plants to ease grid constraints, that’s going to be really detrimental to our climate goals.”

The bottom line, Gamazaychikov said, is that nobody really knows exactly how much energy load data centers are going to contribute in the coming years.

“But a ton you reduce now is more worthwhile than a ton you reduce in 20 years,” he said.
